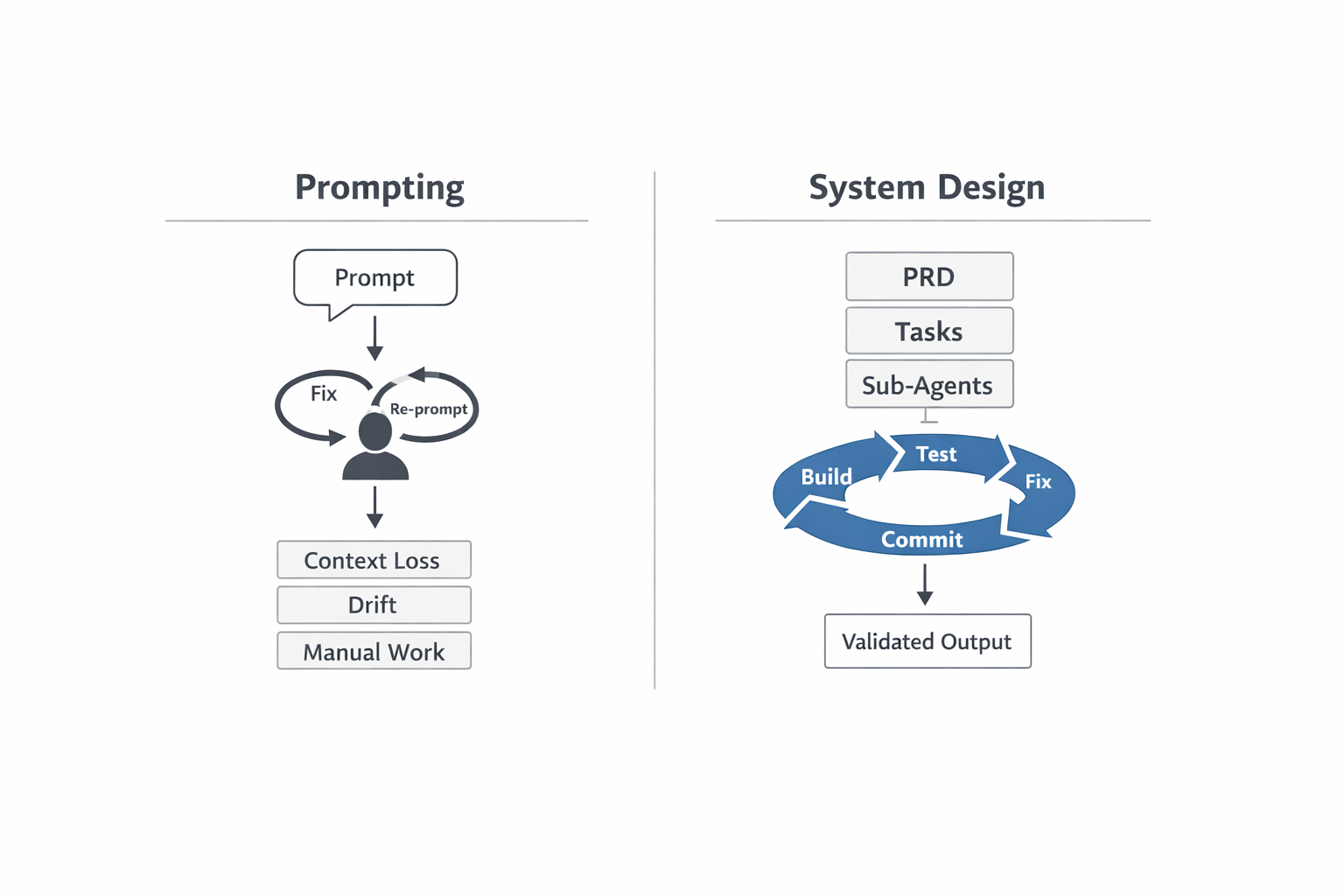

Most people are stuck in the same loop with AI.

They prompt. They tweak. They fix. They re-prompt.

It feels productive. It even works—for a while.

But the moment the task becomes real—multi-step, long-lived, with quality expectations—everything starts to break. Context gets lost. Decisions drift. Outputs become inconsistent. You end up babysitting the model.

This post is about what happens after that phase.

I want to share how I rebuilt my personal website in a few hours using a deterministic AI workflow—and, more importantly, what that process taught me about how to work with AI in marketing, content, and strategy.

This isn't a hype story. It's a systems story.

If you're an AI-forward marketer, founder, or strategist who feels that "prompting harder" isn't the answer anymore, this is for you.

Why I Decided to Rebuild My Website at All

I didn't rebuild my website because I wanted a prettier site.

I rebuilt it because I wanted control.

Social platforms fragment your work. Posts, talks, podcasts, experiments—everything lives in isolation, optimized for feeds, not meaning.

That was fine when humans were the primary audience.

But now we have LLMs.

AI systems are already summarizing who you are, what you do, and what you're "about," based on whatever content they can crawl. If you don't define a clear, authoritative source of truth, you outsource that narrative by default.

A personal website, in this context, isn't about "personal branding."

It's about:

- owning your narrative

- connecting your thinking over time

- creating a durable, indexable source of truth

So I set a constraint: build a fully custom site, fast, without relying on templates or builders.

That constraint forced me to confront something bigger: how I actually work with AI.

The Problem with Prompting (Once You Leave the Shallow End)

Prompting is not useless. It's just limited.

It works well for:

- ideation

- drafts

- one-off tasks

It fails when you try to build systems.

Why?

Because LLMs have hard constraints you can't wish away:

- Context limits – long projects degrade over time

- No durable memory – conversations ≠ projects

- Non-determinism – steps get skipped, tests don't run, quality drifts

When people say "AI is unreliable," what they often mean is:

"My workflow has no structure—no repeatable steps, no validation gates, no way to catch drift before it compounds into failure."

So the real question becomes:

What happens if, instead of better prompts, you design a better process?

Enter Ralph: AI Orchestration, Not AI Chat

To test this, I used a deterministic workflow called Ralph (you can find it here: https://github.com/subsy/ralph-tui).

Ralph is not "another AI tool." It's an orchestrator.

Its job is not to be smart—it's to enforce process.

At a high level, Ralph works with two distinct threads:

1. Planning

Ralph takes:

- a PRD

- supporting specs

And turns them into a concrete task list.

This is where vague goals become concrete tasks—"build a homepage" becomes "create hero section with value props + CTA, build features grid, add testimonials section."

No execution yet—just clarity.
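As a rough illustration of what "vague goal in, concrete tasks out" can look like as data, here is a minimal TypeScript sketch. The `Goal` and `Task` shapes, the `planTasks` function, and the acceptance checks are hypothetical names invented for this example. They are not Ralph's actual schema, and in a real run the breakdown would come from the model reading the PRD rather than from hand-written input.

```typescript
// Hypothetical shapes for illustration only; not Ralph's actual task format.
interface Task {
  id: string;
  title: string;
  acceptance: string[]; // checks that a person or a test can verify
  status: "pending" | "done";
}

interface Goal {
  name: string;
  parts: { title: string; acceptance: string[] }[];
}

// Turn one broad goal into individually checkable tasks.
function planTasks(goal: Goal): Task[] {
  return goal.parts.map((part, index): Task => ({
    id: `${goal.name}-${index + 1}`,
    title: part.title,
    acceptance: part.acceptance,
    status: "pending",
  }));
}

// "Build a homepage", broken down as in the example above.
// The acceptance strings are assumed, purely for the sketch.
const homepage: Goal = {
  name: "homepage",
  parts: [
    { title: "Create hero section with value props + CTA", acceptance: ["CTA is visible"] },
    { title: "Build features grid", acceptance: ["Grid renders"] },
    { title: "Add testimonials section", acceptance: ["Section renders"] },
  ],
};

const tasks = planTasks(homepage);
console.log(tasks.map((task) => `${task.id}: ${task.title}`));
```

The point of the shape is that every task carries its own definition of done, so nothing reaches execution as an open-ended request.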

2. Execution

Ralph then takes each task and runs a deterministic loop: build → test → fix → commit.

Each step can be handled by specialized sub-agents with scoped responsibilities.
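The loop itself is simple enough to sketch in a few lines of TypeScript. This is an assumption-heavy illustration, not Ralph's implementation: the `Steps` interface, the `runTask` function, and the `maxFixAttempts` limit are hypothetical, and the steps are synchronous stubs where a real orchestrator would call agents and a test runner.

```typescript
// Hypothetical step signatures; a real orchestrator would call agents here.
interface Steps {
  build: (task: string) => string; // produce an artifact for the task
  test: (artifact: string) => string[]; // list of failures, empty means pass
  fix: (artifact: string, failures: string[]) => string;
  commit: (task: string, artifact: string) => void;
}

// Fixed loop: build, then test, then fix and re-test until the tests pass
// or the attempt budget runs out. Only passing work is committed.
function runTask(task: string, steps: Steps, maxFixAttempts = 3): boolean {
  let artifact = steps.build(task);
  for (let attempt = 0; attempt <= maxFixAttempts; attempt++) {
    const failures = steps.test(artifact);
    if (failures.length === 0) {
      steps.commit(task, artifact);
      return true;
    }
    if (attempt < maxFixAttempts) {
      artifact = steps.fix(artifact, failures);
    }
  }
  return false; // failing work is never committed
}

// Stub steps, assumed for the demo: the first draft fails, one fix passes.
const committed: string[] = [];
const demoSteps: Steps = {
  build: (task) => `draft of ${task}`,
  test: (artifact) => (artifact.endsWith("[fixed]") ? [] : ["needs a fix"]),
  fix: (artifact) => `${artifact} [fixed]`,
  commit: (_task, artifact) => {
    committed.push(artifact);
  },
};

const succeeded = runTask("hero section", demoSteps);
console.log(succeeded, committed);
```

Notice that nothing in `runTask` knows which model sits behind `build` or `fix`. That is the sense in which the workflow is fixed and the model is replaceable.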

The key difference from "just using ChatGPT" is this:

The workflow is fixed. The model is replaceable.

You could swap GPT-4 for Claude tomorrow and the system would still work, because the reliability comes from the process, not from any one model.

Ralph makes sure the process is followed—every time.

The Experiment: Build a Real Website, End to End

I used this workflow to build my personal website.

Not a demo. Not a landing page mockup. A real site with structure, content, SEO, and responsiveness.

I wrote a proper PRD:

- target audience

- tone of voice

- site structure

- constraints and goals

Ralph converted that into tasks. Then executed them, one by one.

The first working version shipped in a few hours of execution time.

But the real value wasn't speed.

It was signal.

What Actually Mattered (Lessons Learned)

1. PRDs Matter More Than Prompts

Where my PRD was clear, execution was strong.

Where it was vague—especially around UI/UX—results drifted.

For example, I wrote "create a hero section" on my first pass—vague and generic. The AI generated three versions, all mediocre. When I updated it to "Hero section: Navy background, centered headline with 3-word hook, gold CTA button positioned lower-right, tagline below"—suddenly the output was production-ready on the first try. Clarity was the multiplier.

The AI didn't "fail." It did exactly what the system allowed.

This was the first big realization:

AI quality is capped by human clarity.

If you don't articulate intent, the system will invent it for you.

2. UI Is a Separate Skill (and Gemini Is Very Good at It)

I learned quickly that UI work benefits from different strengths.

Gemini, in particular, is excellent at UI generation—but only when given:

- concrete references

- mockups

- visual direction

Once I began with rough mockups, everything downstream improved.

I sketched rough wireframes in Figma: nothing fancy, just boxes and labels showing visual hierarchy and CTAs. I added annotations like "gold accent here" and "larger text for headline." I didn't iterate on the mockup, because the point was just to externalize intent. When the AI saw the visual direction, it had constraints to work within instead of guessing.

Guessing is expensive. Constraints are cheap.

3. Verify the System Before You Trust It

Before letting Ralph run end-to-end, I ran it step by step.

I verified:

- sub-agent responsibilities

- required skills

- task boundaries

This mattered.

Once I understood how the system behaved, I could safely give it authority.

This is a pattern I now follow everywhere:

Trust comes after observability.

4. TypeScript + Tests Change the Game

Using TypeScript made expectations explicit and enforceable: the compiler checks the types, and tests check the behavior.

Tests turned AI output into something I could rely on—not because the model was perfect, but because failure was visible and correctable.
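To show what "failure was visible" can mean in practice, here is a small TypeScript sketch of a typed check that generated pages would have to pass. Everything in it is assumed for illustration: the `PageMeta` shape, the `checkPageMeta` and `assertPageMeta` functions, and the 60-character title limit are hypothetical and are not taken from the actual site.

```typescript
// Hypothetical page metadata shape; the real site's types may differ.
interface PageMeta {
  title: string;
  description: string;
}

const MAX_TITLE_LENGTH = 60; // assumed limit for this sketch

// Returns a list of problems; an empty list means the page passes.
function checkPageMeta(meta: PageMeta): string[] {
  const problems: string[] = [];
  if (meta.title.trim().length === 0) {
    problems.push("title is empty");
  }
  if (meta.title.length > MAX_TITLE_LENGTH) {
    problems.push(`title is longer than ${MAX_TITLE_LENGTH} characters`);
  }
  if (meta.description.trim().length === 0) {
    problems.push("description is empty");
  }
  return problems;
}

// A failing check throws, so a broken page cannot pass silently.
function assertPageMeta(meta: PageMeta): void {
  const problems = checkPageMeta(meta);
  if (problems.length > 0) {
    throw new Error(`Page meta failed: ${problems.join("; ")}`);
  }
}

// Example values are made up for the demo.
assertPageMeta({ title: "About", description: "Who I am and what I work on." });
console.log(checkPageMeta({ title: "", description: "" }));
```

The compiler rejects a page object that is missing a field, and the check rejects one whose fields are present but unusable. Both kinds of failure surface before a commit instead of after a deploy.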

This is where the work stopped feeling experimental.

The Bigger Shift: From Building a Website to Building a Factory

And then it clicked.

I wasn't building a website. I was building a factory.

A machine. An engine that takes a clear thought and produces working output.

Not perfectly.

But reliably.

Once you see the shift—from tool to system—you can't unsee it.

Marketing workflows have the same structure as software:

- research ≈ discovery

- briefs ≈ PRDs

- content ≈ implementation

- validation ≈ testing

Today, I'm applying the same Ralph-based principles to marketing:

- orchestrated research

- deterministic content generation

- strategy workflows with validation loops

Here's what that looks like in practice. The research step produces a structured brief, not free-form notes. The content generation step outputs drafts with pre-defined sections (intro, problem, solution, CTA). The validation step checks for brand voice consistency and engagement potential. Each step is discrete, measurable, and repeatable.
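The same idea can be sketched as types. The TypeScript below is a minimal illustration under stated assumptions: the `Brief` and `Draft` shapes, the `isBriefComplete` and `validateDraft` functions, and the banned-phrase list are hypothetical. The banned-phrase check is only a crude stand-in for brand voice, and the sketch does not attempt to measure engagement potential.

```typescript
// Hypothetical shapes for a content pipeline; field names are assumptions.
interface Brief {
  audience: string;
  topic: string;
  keyPoints: string[];
}

type SectionName = "intro" | "problem" | "solution" | "cta";
type Draft = Record<SectionName, string>;

const REQUIRED_SECTIONS: SectionName[] = ["intro", "problem", "solution", "cta"];

// A brief is usable only when every field is filled in.
function isBriefComplete(brief: Brief): boolean {
  return (
    brief.audience.trim().length > 0 &&
    brief.topic.trim().length > 0 &&
    brief.keyPoints.length > 0
  );
}

// Returns a list of issues; an empty list means the draft passes.
function validateDraft(draft: Draft, bannedPhrases: string[]): string[] {
  const issues: string[] = [];
  for (const section of REQUIRED_SECTIONS) {
    if (draft[section].trim().length === 0) {
      issues.push(`section "${section}" is empty`);
    }
  }
  const fullText = REQUIRED_SECTIONS.map((section) => draft[section])
    .join(" ")
    .toLowerCase();
  for (const phrase of bannedPhrases) {
    if (fullText.includes(phrase.toLowerCase())) {
      issues.push(`off-brand phrase: "${phrase}"`);
    }
  }
  return issues;
}

// Demo content is invented for the example.
const draft: Draft = {
  intro: "AI is a game-changer for content teams.",
  problem: "Long projects drift.",
  solution: "Use a fixed loop with validation.",
  cta: "",
};

const issues = validateDraft(draft, ["game-changer"]);
console.log(issues);
```

A draft with a missing section or an off-brand phrase comes back with a named issue, which gives the fix step something specific to act on.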

Same architecture. Different domain.

A Practical Framework You Can Use

If you want something concrete, here's the model I now use:

When AI feels unreliable, check this:

1. Is the goal explicit? Not "write the blog post" but "write an 800-word post about AI systems, conversational tone, 5 sections, includes 2 concrete examples."

2. Is the work broken into small, scoped tasks? Big hairy goals fail. Discrete steps succeed.

3. Are responsibilities separated by skill? Different models/agents handle different steps. Don't ask one tool to do everything.

4. Is there a fixed execution loop with validation? Plan → Execute → Test → Fix → Repeat. Process matters more than the model.

5. Is memory externalized (docs, Git, artifacts)? Long conversations degrade. Write things down—briefs, PRDs, commit messages. Make decisions permanent.

If the answer is "no" to most of these, prompting won't save you.
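If it helps to make the checklist mechanical, it can be written as a small TypeScript structure. This is only a sketch: the field names and the `failedChecks` and `needsProcessWork` functions are hypothetical, and "most" is interpreted here as more than half of the five questions.

```typescript
// One boolean per question in the checklist above; names are assumptions.
interface WorkflowChecklist {
  goalIsExplicit: boolean;
  tasksAreSmallAndScoped: boolean;
  responsibilitiesSeparatedBySkill: boolean;
  fixedLoopWithValidation: boolean;
  memoryIsExternalized: boolean;
}

// Names of the questions answered "no".
function failedChecks(checklist: WorkflowChecklist): string[] {
  const keys = Object.keys(checklist) as (keyof WorkflowChecklist)[];
  return keys.filter((key) => !checklist[key]);
}

// True when more than half of the answers are "no".
function needsProcessWork(checklist: WorkflowChecklist): boolean {
  const total = Object.keys(checklist).length;
  return failedChecks(checklist).length > total / 2;
}

// Example answers, invented for the demo.
const currentWorkflow: WorkflowChecklist = {
  goalIsExplicit: true,
  tasksAreSmallAndScoped: false,
  responsibilitiesSeparatedBySkill: false,
  fixedLoopWithValidation: false,
  memoryIsExternalized: true,
};

console.log(failedChecks(currentWorkflow), needsProcessWork(currentWorkflow));
```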

The Real Takeaway

Prompting is not wrong. It's just the shallow end.

The real leverage comes from designing systems that work when you're not watching.

AI won't replace marketers or strategists.

But it will expose who understands process, structure, and intent—and who doesn't.

If you want better results, don't ask:

"What prompt should I use?"

Ask:

"What system would make this repeatable?"

That's the shift.

Here's where to start: Pick one recurring workflow you do—whether it's content planning, research, or strategy calls. Map out the current steps (even if they're messy). Ask yourself: What would make this deterministic? Where do decisions drift? Where could I add validation?

That's not a prompt question.

That's a systems question.

And that's where things actually start to compound.